AI and information retrieval

AI tools can speed up and simplify scientific information retrieval. At the same time, it is important to be aware of the risks of inaccurate information and fictitious references. On this page you will learn what AI search tools are and what to consider when using them.

Artificial intelligence (AI) is a broad term, and some AI technologies have been in use for a long time, such as relevance ranking and recommendations for similar content. With the release of ChatGPT, a tool based on generative AI and natural language processing became widely available.

Generative AI

Generative AI means that AI is used to generate digital content. The digital content can be text, images, music or some other material.

Generative AI is largely based on probability. Given the beginning of a text, how is it most likely to continue? Which words and sentences tend to occur together? Based on this, a new text is generated. Because the text is built from common combinations of words and sentences, it looks plausible and credible. Factual information will often be correct, but it can also be completely wrong. This is known as “AI hallucination.”
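The idea of predicting the most likely continuation can be illustrated with a deliberately simplified Python sketch. It assumes a tiny made-up corpus and a "bigram" model that only counts which word follows which. Real generative AI tools use large neural language models, so this shows the principle rather than how any specific tool works. The generated sentence reads fluently, but it appears nowhere in the corpus and even contradicts it. This is a small-scale version of a hallucination.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus; real tools are trained on vastly more text.
corpus = (
    "the library is open today . "
    "the museum is closed on mondays . "
    "the shop is closed on mondays . "
    "the library is quiet ."
)

def build_bigram_counts(text):
    """Count how often each word is directly followed by each other word."""
    words = text.split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts

def most_likely_next(counts, word):
    """Return the word that most often follows `word`, or None if it was never seen."""
    if word not in counts:
        return None
    return counts[word].most_common(1)[0][0]

def generate(counts, start, max_words=6):
    """Extend `start` by repeatedly appending the most likely next word."""
    words = [start]
    for _ in range(max_words):
        nxt = most_likely_next(counts, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)

counts = build_bigram_counts(corpus)
print(generate(counts, "the"))  # prints "the library is closed on mondays ."
```

Each step picks the statistically most common continuation. Nothing in the process checks whether the resulting statement is true, which is why the output can be plausible and wrong at the same time.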

AI tools that rely entirely on generative AI cannot provide sources for the information, because the tool has not searched for information but only generated a text. Sometimes such a tool will produce references anyway, but these are often fictitious and do not exist.

In academic work, citing and checking sources is essential. You need to know where information originally comes from and be able to go back and check the sources on which a text is based. Without specified sources, this is not possible. Tools based solely on generative AI are therefore not suitable for scientific information retrieval.

Be aware that the factual information you get from generative AI may be inaccurate in other contexts too. How much of it is inaccurate varies: for well-established information found in many sources, the proportion of incorrect answers is lower than for specific or controversial topics.

AI tools for searching

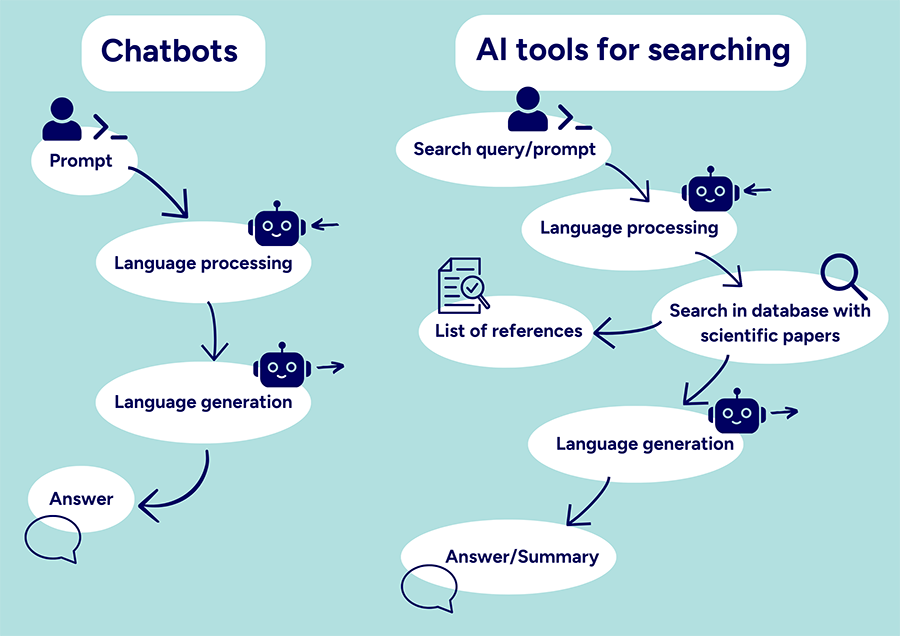

Some AI tools also perform searches on the web or in a database of publications. The search results can be delivered as they are, or used as the basis for a generated text response. Such a response may contain information on sources and can be useful in academic contexts.

The chatbots Bing and Bard search the internet and generate a response using AI. Tools such as Elicit, Keenious and Semantic Scholar combine searching databases of scientific publications with AI. AI is used in various ways, for example to interpret natural language, to select and rank search results, or to generate an answer or summary.

There are also tools that combine citation analysis with AI and can present networks of connections between scientific publications. Examples include Connected Papers, Inciteful and ResearchRabbit.

Training data

Most AI tools have been trained on large amounts of data. The choice of data and the size of the data set have a major influence on how the tool works. The data usually covers a limited period, so recent information may be missing. For example, the training data for the original ChatGPT did not include documents newer than 2021.

Data can also be biased in various ways. For example, the training data for facial recognition services has often consisted mostly of images of white men, so these services tend to perform less well on Black women.

Most AI tools do not clearly state what training data was used, which is a disadvantage in academic contexts where transparency is important.

Machine learning

Many AI tools continue to learn as they are used. This means that they may become better at performing tasks. It also means that they may not give the same results if you give them the same task again later. The reproducibility of research, i.e. that a study can be repeated by someone else with the same result, is usually considered an important principle. Lack of reproducibility can therefore be a problem when using AI tools in research.

Terms of use and copyright

Many AI tools save your input and use it in various ways, including as training data. Read the terms of use of each service to find out more, and be careful about entering, for example, someone else's material or sensitive data.

Copyright only applies to material created by natural persons. AI-generated images and text are therefore not covered, and no one holds copyright to AI-produced material. However, there may be restrictions on how the material can be used. These restrictions can be found in the terms of use you accepted when you created an account for the service.

Cheating and plagiarism

Before using AI tools in your studies, check whether the course in question has any guidelines on their use. In general, you should never submit a piece of work as your own unless you have substantially completed it yourself. For the sake of transparency, it can be good to state which tools you have used and how. KTH's pages on cheating and plagiarism contain more information.

AI tools as a reference

Practice varies as to whether AI tools should be cited as references. They are not sources in the traditional sense and according to some recommendations they should rather be mentioned as tools used. If you need to reference AI tools, you can sometimes find examples of how to do so in the guide to the referencing style you are using.