Area 4: Embodied Representation Learning

In this area we develop methodologies for robots, cars, dialogue systems and other autonomous agents to perceive the world and create meaningful computer representations of it through sensors, primarily vision. Many projects overlap with Areas 1-3 in their application focus. My earlier research concerned affordances, object-action recognition and robot learning from human demonstration. Recent projects have a more general Computer Vision and Machine Learning focus, often with medical applications.

People

Research Engineers

- Gianluca Volkmer (2023)

- Athanasios Charisoudis (2022-2023)

- Ricky Molén (2022)

- Carles Balsells Rodas (2019-2021)

- Chenda Zhang (2020)

- Moein Sorkhei (2018-2020)

- John Pertoft (2016)

BSc Students

- Ricky Molén (BSc 2021)

- Ruben Rehn (BSc 2021)

MSc Students

- Kirill Pavlov

- Erik Sik

- Athanasios Charisoudis (MSc 2024)

- Luis González Gudiño (MSc 2023)

- Ruibo Tu (MSc 2018)

- Charles Hamesse (MSc 2018)

PhD Students

- Gustaf Tegnér (co-supervisor)

- Ruibo Tu (PhD 2023, now in the STING project)

- Marcus Klasson (PhD 2022, now at Aalto University, Finland)

- Püren Güler (co-supervisor, PhD 2017, now at Ericsson Research, Sweden)

- Cheng Zhang (PhD 2016, now at Microsoft Research, UK)

- Alessandro Pieropan (PhD 2015, now at Univrses, Sweden)

- Javier Romero (co-supervisor, PhD 2011, now at Reality Labs Research, Meta, Spain)

Post Docs

- Neeru Dubey (from 2024)

- Benjamin Christoffersen (2020-2021, now at Karolinska Institutet, Sweden)

- David Geronimo (2013-2014, now at Catchoom Technologies, Spain)

Collaborators

- Hossein Azizpour

- Pawel Herman

- Mark Clements (Karolinska Institutet, Sweden)

- Pier Luigi Dovesi (Silo AI, Sweden)

- Keith Humphreys (Karolinska Institutet, Sweden)

- Anna Rising (Swedish University of Agricultural Sciences, Sweden)

- Cheng Zhang (Microsoft Research, UK)

Permanent Activity

MSc projects at KTH in collaboration with industry (since 2007)

Many MSc projects at KTH are conducted in collaboration with industry or other universities and research institutes. I have the pleasure of examining or supervising a number of such projects every year. Such external collaborations are always stimulating, and often lead to publications and further collaboration. Recent publications with external MSc students

Current Projects

Deep representation learning from sparse data (SeRC 2023-present)

This project, part of the SeRC Data Science MCP, develops generative AI methods to model and recognize human non-verbal behavior from 3D body, face and hand pose, gaze behavior and RGB video, in the context of language, knowledge representations and EEG. It operates in interaction with OrchestrAI in Area 1; Detecting behavioral biomarkers, UNCOCO and STING in Area 2; ANITA in Area 3; and Generative AI for the creation of artificial spiderweb in Area 4. Publications

Generative AI for the creation of artificial spiderweb (WASP, DDLS 2023-present)

This project is a collaboration with a veterinary biochemistry group at the Swedish University of Agricultural Sciences. The goal is to create artificial spider web, a protein-based, highly durable and strong material. Our contribution is to develop deep generative methods for predicting material properties from the protein composition of the raw material. Publications

HiSS: Humanizing the Sustainable Smart city (KTH Digital Futures 2019-present)

The overarching objective is to improve our understanding of how human social behavior shapes sustainable smart city design and development through multi-level interactions between humans and cyber agents. Our key hypothesis is that human-social wellbeing is the main driver of smart city development, strongly influencing human-social choices and behaviors. This hypothesis will be substantiated through mathematical and computational modelling, which spans and links multiple scales, and tested by means of several case studies set in the Stockholm region as part of the Digital Demo Stockholm initiative. Publications

Past Projects

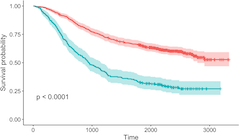

Variational Approximations and Inference for survival analysis and joint modeling (SeRC 2019-2022)

This project, which is part of the SeRC eCPC MCP, is a collaboration with the Biostatistics group at the Department of Medical Epidemiology and Biostatistics. Together we develop variational methods for more accurate prediction of survival probability. Publications

Automatic visual understanding for visually impaired persons (Promobilia 2017-2022)

In this project, we aim to build a system that performs robust, real-time automatic image understanding to aid visually impaired persons. Publications

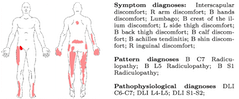

Causal Healthcare (SeRC, KTH 2016-2022)

In this project, which is part of the SeRC Data Science MCP, we develop Machine Learning methods that discover causal structures from medical data, for automatic decision support to medical doctors in their work to diagnose different types of injuries and illnesses. The purpose is to determine the underlying causes of observed symptoms and measurements, making it possible to semi-automatically reason about the potential effects of different actions, and propose suitable treatment. Publications

AIVIA: AI for Viable Cities (VINNOVA 2019-2020)

This is a subproject of the VINNOVA project Viable Cities, in which we wrote a series of popular-science essays on AI and the cities of the future:

- Magnus Boman. Att framtidsskriva en stad [Future-writing a city], Medium, September 11, 2019.

- Magnus Boman. Strategier för utveckling av framtidens artificiella intelligens [Strategies for developing the artificial intelligence of the future], Medium, October 3, 2019.

- Hedvig Kjellström. Från Big Data till Small Data - data är inte gratis [From Big Data to Small Data: data is not free], Medium, October 17, 2019.

- Magnus Boman. Ghost work powering AI-based services, Medium, January 13, 2020.

- Hedvig Kjellström. Singulariteten... Är det verkligen den mest angelägna frågan? [The singularity... Is it really the most pressing question?], Medium, February 5, 2020.

SocSMCs: Socialising SensoriMotor Contingencies (EU H2020 2015-2018)

As robots become more omnipresent in our society, we are facing the challenge of making them more socially competent. However, in order to safely and meaningfully cooperate with humans, robots must be able to interact in ways that humans find intuitive and understandable. Addressing this challenge, we propose a novel approach for understanding and modelling social behaviour and implementing social coupling in robots. Our approach presents a radical departure from the classical view of social cognition as mind-reading, mentalising or maintaining internal representations of other agents. This project is based on the view that even complex modes of social interaction are grounded in basic sensorimotor interaction patterns. SensoriMotor Contingencies (SMCs) are known to be highly relevant in cognition. Our key hypothesis is that learning and mastery of action-effect contingencies are also critical to enable effective coupling of agents in social contexts. We use "socSMCs" as a shorthand for such socially relevant action-effect contingencies. We will investigate socSMCs in human-human and human-robot social interaction scenarios. The main objectives of the project are to elaborate and investigate the concept of socSMCs in terms of information-theoretic and neurocomputational models, to deploy them in the control of humanoid robots for social entrainment with humans, to elucidate the mechanisms for sustaining and exercising socSMCs in the human brain, to study their breakdown in patients with autism spectrum disorders, and to benchmark the socSMCs approach in several demonstrator scenarios. Our long term vision is to realize a new socially competent robot technology grounded in novel insights into mechanisms of functional and dysfunctional social behavior, and to test novel aspects and strategies for human-robot interaction and cooperation that can be applied in a multitude of assistive roles relying on highly compact computational solutions.
My part of the project comprised human motion and activity forecasting. Publications

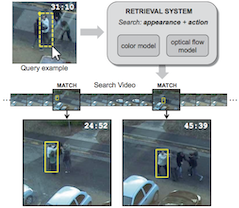

FOVIAL: FOrensic VIdeo AnaLysis - finding out what really happened (VR, EU Marie Curie 2013-2016)

In parallel to the massive increase of text data available on the Internet, there has been a corresponding increase in the amount of available surveillance video. There are good and bad aspects of this. One undeniably positive aspect is that it is possible to gather evidence from surveillance video when investigating crimes or the causes of accidents; this is forensic video analysis. Forensic video investigations are currently carried out manually. This involves a huge and very tedious effort; e.g., 60 000 hours of video in the Breivik investigation. The amount of surveillance data is also constantly growing, which means that in future investigations it will no longer be possible to go through all the evidence manually. The solution is to automate parts of the process. In this project we propose to learn an event model from surveillance data that can be used to characterize all events in a new set of surveillance video recorded from a camera network. Our model will also represent the causal dependencies and correlations between events. Using this model, or explanation, of the data from the network, we will design a semi-automated forensic video analysis tool with a human in the loop. The user chooses a certain event, e.g., a certain individual getting off a train, and the system returns all earlier observations of this individual, or all other instances of people getting off trains, or all the events that may have caused or are correlated with the given "person getting off train" event. Publications

RoboHow (EU FP7 2013-2016)

RoboHow aims to enable robots to competently perform everyday human-scale manipulation activities in both human working and living environments. To achieve this goal, RoboHow pursues a knowledge-enabled and plan-based approach to robot programming and control. The vision of the project is a cognitive robot that autonomously performs complex everyday manipulation tasks and extends its repertoire of such tasks by acquiring new skills through web-enabled and experience-based learning, as well as by observing humans. My part of the project comprised object tracking and visuo-haptic object exploration. Publications

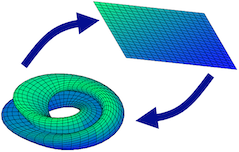

TOMSY: TOpology based Motion SYnthesis for dexterous manipulation (EU FP7 2011-2014)

The aim of TOMSY is to enable a generational leap in the techniques and scalability of motion synthesis algorithms. We propose to do this by learning and exploiting appropriate topological representations and testing them on challenging domains of flexible, multi-object manipulation and close contact robot control and computer animation. Traditional motion planning algorithms have struggled to cope with both the dimensionality of the state and action space and generalisability of solutions in such domains. This proposal builds on existing geometric notions of topological metrics and uses data driven methods to discover multi-scale mappings that capture key invariances - blending between symbolic, discrete and continuous latent space representations. We will develop methods for sensing, planning and control using such representations. Publications

PACO-PLUS: Perception, Action and COgnition through learning of object-action complexes (EU FP6 2007-2010)

The EU project PACO-PLUS brought together an interdisciplinary research team to design and build cognitive robots capable of developing perceptual, behavioural and cognitive categories that can be used, communicated and shared with other humans and artificial agents. In my part of the project, we were interested in programming-by-demonstration applications, in which a robot learns how to perform a task by watching a human do the same task. This involves learning about the scene, the objects in the scene, and the actions performed on those objects. It also involves learning grammatical structures of the actions and objects involved in a task. Publications
