SONAO

Robust Non-Verbal Expression in Virtual Agents and Humanoid Robots: New Methods for Augmenting Stylized Gestures with Sound

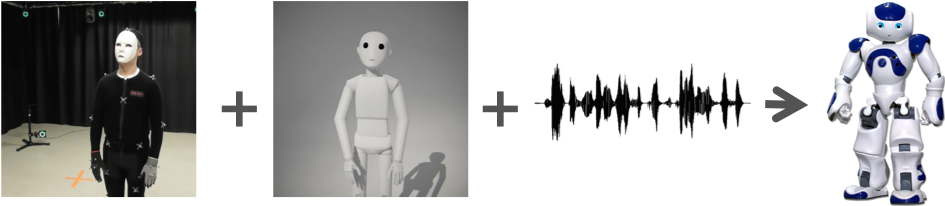

The expressive capabilities of current humanoid robots are limited because they have far fewer degrees of freedom than humans. However, body motion can be communicated successfully with very simple graphical representations (e.g., point-light displays) and cartoonish sounds.

The aim of this project is to establish new methods, based on the sonification of simplified movements, for achieving robust interaction between users and humanoid robots or virtual agents. To do so, it combines the research team members' expertise in social robotics, sound and music computing, affective computing, and body motion analysis. We will engineer sound models that implement effective mappings between stylized body movements and sound parameters, enabling an agent to express high-level qualities of body motion through sound. These mappings are essential for providing feedback on body motion and for supporting its understanding.

The project will result in new theories, guidelines, models, and tools for the sonic representation of high-level qualities of body motion in interactive applications. This work contributes to the growing research fields of data sonification, interactive sonification, embodied cognition, multisensory perception, and non-verbal and gestural communication in robots.

Results

- Perception of Mechanical Sounds Inherent to Expressive Gestures of a NAO Robot. Supplementary material: data, sounds and videos.

- From Vocal-Sketching to Sound Models. Supplementary material: code, transcriptions, sounds and videos.

- Sonic Characteristics of Robots in Films. Supplementary material: videos.

Team

Funding

- KTH Small Visionary Project grant (2016)

- Swedish Research Council, grant 2017-03979

- NordForsk’s Nordic University Hub “Nordic Sound and Music Computing Network (NordicSMC)”, project number 86892.