Mixed Reality and Mobility

Interaction in the urban environment primarily takes place in motion. However, today's interaction techniques are not tailored to the specific needs of interaction on-the-go. Beyond this lack of emphasis on mobile interaction, these techniques largely ignore the skills and dexterity we display in our daily interactions with the physical world. Urbanization continues, and by 2050 about two-thirds of the world's population is projected to live in cities. This creates a need for safe and user-friendly interaction in urban environments. In particular, vulnerable road users, who are already less protected than car drivers today, need systems and interaction concepts that enable safe and effortless mobility. Within HCI, we contribute to the design and construction of technical prototypes, to evaluation methodology, and to self-driving micro-mobility using Mixed Reality. Our work covers the following areas:

- assistance systems for vulnerable road users,

- evaluation methods and environments (to investigate the assistance systems from the first area), and

- self-driving micro-mobility.

Assistance Systems for Vulnerable Road Users

We work on interaction on and around the body and on recognizing physiological states to enable fast, accurate, and pleasant interaction on-the-go. We focus on designing user interfaces that assist vulnerable road users in dangerous situations in urban environments. To this end, we build on the success of multimodal and mixed reality user interfaces to provide the necessary assistance on-the-go, e.g., via haptic feedback, AR, and head-up displays. We also transfer further research approaches into prototypes that implicitly measure the condition of vulnerable road users. We combine smart glasses, ECG, and EEG with previously developed methods and sensors, e.g., to offer in-situ support in Augmented and Virtual Reality interfaces. For instance, we identify whether a cyclist is tired and not paying attention to the road situation.

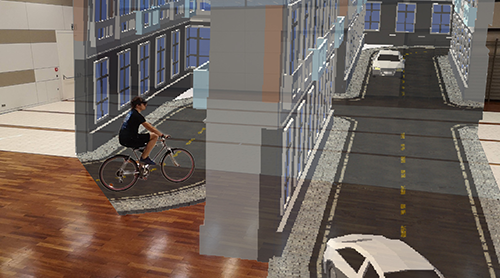

Evaluation Methods and Environments

We focus on the evaluation methods and environments needed to create realistic, safe, and immersive conditions for investigating these systems. We look into how to bring existing laboratory evaluation settings as close to reality as possible without introducing discomfort, e.g., motion sickness in virtual reality simulators. Additionally, we study the gradual shift towards real-world conditions by adding mixed reality interfaces, e.g., simulating dangerous road scenarios via AR and VR while the participant cycles on a real bicycle.

Self-Driving Micro-Mobility

Future urban environments will introduce a wide range of automated and self-driving vehicles. Current HCI research focuses primarily on self-driving cars, leaving self-driving micro-mobility largely unaddressed. Self-driving micro-mobility will provide assistance and inclusion in many application areas, such as mail and food delivery, accessible cycling, and shared bicycles and e-scooters. We investigate the use of multimodal and mixed reality user interfaces to create understandable and effortless interaction between users and self-driving micro-mobility vehicles, e.g., a self-driving bicycle combined with VR glasses.

Contact