VINNOVA PROSENSE

Period: 2021-2024

PIs: Patric Jensfelt, John Folkesson and Jana Tumova

Coordinator: Scania

Autonomous driving is disrupting the classical transportation sector and offers great potential to enhance road safety and create new business models. To thrive in this innovative landscape, Scania intends to focus on novel technical solutions that increase the safety, robustness, and scalability of autonomous vehicles. One of the key enablers of such an autonomous driving system is scene perception, i.e., the ability to comprehend the surrounding environment from sensor data. The accuracy and level of detail of this comprehension are crucial for safe motion planning of autonomous vehicles.

The work at KTH will focus on two work packages. In the first, WP4 - Multi-source fusion for robust real-time scene perception, we will focus on building the local scene model, which aggregates information from multiple sensors and algorithms as well as scene metadata. By combining perceived information with, for example, preloaded high-density maps, the local scene model gives a more complete view of the world than would otherwise be possible. The local scene model maintains a list of tracked objects. Objects detected or tracked by different sensors and algorithms will be merged, and their classification results will be combined. In WP5 - Proactive context aware object perception, the local scene model is exploited to interpret and predict a particular situation. This will include novel combinations of various inference approaches. By the end of the project, the goal is for this work to be part of an integrated system that uses logical reasoning to detect objects that would otherwise go undetected.
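To make the idea of merging objects from several sensors more concrete, the following is a minimal sketch and not the project's actual implementation. It assumes 2D positions in a common vehicle frame, a simple distance gate for associating detections, and independent classifiers whose class probabilities can be combined by a normalized product. All names (`Detection`, `SceneObject`, `merge_detections`, `fuse_class_probs`) and the gate value are hypothetical.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class Detection:
    """One detection reported by a single sensor or algorithm (hypothetical type)."""
    sensor: str
    x: float
    y: float
    class_probs: dict[str, float]


@dataclass
class SceneObject:
    """One merged entry in the local scene model's object list (hypothetical type)."""
    x: float
    y: float
    class_probs: dict[str, float]
    sources: list[str]


def fuse_class_probs(a, b, floor=1e-3):
    """Combine two class distributions with a normalized product.

    Assumes the two classifiers are independent. Labels missing from one
    distribution get a small floor probability so they are not ruled out.
    """
    labels = set(a) | set(b)
    product = {
        label: max(a.get(label, 0.0), floor) * max(b.get(label, 0.0), floor)
        for label in labels
    }
    total = sum(product.values())
    return {label: p / total for label, p in product.items()}


def merge_detections(detections, gate=2.0):
    """Greedily merge detections that lie within `gate` metres of an object."""
    objects = []
    for det in detections:
        match, best = None, gate
        for obj in objects:
            dist = hypot(obj.x - det.x, obj.y - det.y)
            if dist <= best:
                match, best = obj, dist
        if match is None:
            # No existing object is close enough: start a new one.
            objects.append(
                SceneObject(det.x, det.y, dict(det.class_probs), [det.sensor])
            )
        else:
            # Running mean of position, fused class distribution.
            n = len(match.sources)
            match.x = (match.x * n + det.x) / (n + 1)
            match.y = (match.y * n + det.y) / (n + 1)
            match.class_probs = fuse_class_probs(match.class_probs, det.class_probs)
            match.sources.append(det.sensor)
    return objects
```

In this sketch, a camera detection and a lidar detection that fall within the gate become one object whose class distribution is sharper than either input, while a distant radar detection stays a separate object. A real system would more likely use proper tracking with uncertainty estimates rather than a fixed gate and a running mean.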

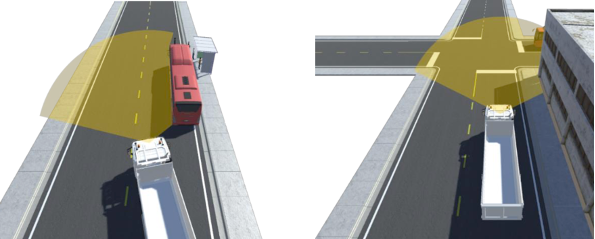

The images above represent two scenarios that we address in the project. On the left, the ego vehicle is overtaking a bus that has stopped at a station. By combining information from the vehicle's sensors with scene metadata about the location of bus stops, the ego vehicle can create a rich local scene model that helps it perceive what the sensors alone would need more data to detect. On the right, the ego vehicle is near an intersection where a building occludes the road approaching from the right. Again, by combining sensor information with reasoning based on the local scene model, the red bus approaching from the right is more likely to be detected.
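One simple way to see why context helps in scenarios like these is a Bayesian update in odds form. This is only an illustrative sketch, not the inference approach used in the project. The function name `posterior` and all numbers are hypothetical: scene metadata (for example, a known bus stop) is assumed to raise the prior probability that an object is present, and the sensors are assumed to contribute a likelihood ratio.

```python
def posterior(prior, likelihood_ratio):
    """Posterior probability that an object is present.

    prior: probability of presence before looking at the sensor data,
           e.g. raised by scene metadata such as a nearby bus stop.
    likelihood_ratio: P(sensor data | present) / P(sensor data | absent).
    """
    if not 0.0 < prior < 1.0:
        raise ValueError("prior must be strictly between 0 and 1")
    if likelihood_ratio < 0.0:
        raise ValueError("likelihood_ratio must be non-negative")
    odds = prior / (1.0 - prior) * likelihood_ratio
    return odds / (1.0 + odds)


# The same weak sensor evidence under two assumed priors (values are made up).
weak_evidence = 5.0
without_context = posterior(0.01, weak_evidence)  # no metadata available
with_context = posterior(0.20, weak_evidence)     # bus stop known from the map
```

With these assumed numbers, the same weak evidence leaves the posterior below 5% without context but lifts it above 50% when the map indicates a bus stop, which is the sense in which the local scene model supports detection with less sensor data.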

Involved people at KTH