PhD Research

Voice, Embodiment, and Autonomy as Identity Affordances

Perceived robot identity has not been discussed thoroughly in Human-Robot Interaction. In particular, very few works have explored how humans tend to perceive robots that migrate through a variety of media and devices. In this paper, we discuss some of the open challenges for artificial robot identity stemming from the robotic features of voice, embodiment, and autonomy. How does a robot’s voice affect perceived robot gender identity, and can we use this knowledge to fight injustice? And how do robot autonomy and decisions affect the mental image humans form of the robot? These, among others, are open questions we wish to bring researchers’ and designers’ attention to, in order to influence best practices on the timely topic of artificial agent identity.

Embodiment Effects in Interactions with Failing Robots

The increasing use of robots in real-world applications will in- evitably cause users to encounter more failures in interactions. While there is a longstanding effort in bringing human-likeness to robots, how robot embodiment affects users’ perception of failures remains largely unexplored. In this paper, we extend prior work on robot failures by assessing the impact that embodiment and failure severity have on people’s behaviours and their perception of robots. Our findings show that when using a smart-speaker embodiment, failures negatively affect users’ intention to frequently interact with the device, however not when using a human-like robot embodiment. Additionally, users significantly rate the human-like robot higher in terms of perceived intelligence and social presence. Our results further suggest that in higher severity situations, human-likeness is distracting and detrimental to the interaction. Drawing on quantitative findings, we discuss benefits and drawbacks of embodiment in robot failures that occur in guided tasks.

Behavioural Responses to Robot Conversational Failures

Humans and robots will increasingly collaborate in domestic environments which will cause users to encounter more failures in interactions. Robots should be able to infer conversational failures by detecting human users’ behavioural and social signals. In this paper, we study and analyse these behavioural cues in response to robot conversational failures. Using a guided task corpus, where robot embodiment and time pressure are manipulated, we ask human annotators to estimate whether user affective states differ during various types of robot failures. We also train a random for- est classifier to detect whether a robot failure has occurred and compare results to human annotator benchmarks. Our findings show that human-like robots augment users’ reactions to failures, as shown in users’ visual attention, in comparison to non-human- like smart-speaker embodiments. The results further suggest that speech behaviours are utilised more in responses to failures when non-human-like designs are present. This is particularly important to robot failure detection mechanisms that may need to consider the robot’s physical design in its failure detection model.

Towards Adaptive and Least-Collaborative-Effort Social Robots

In the future, assistive social robots will collaborate with humans in a variety of settings. Robots will not only follow human orders but will likely also instruct users during certain tasks. Such robots will inevitably encounter user uncertainty and hesitations. They will continuously need to repair mismatches in common ground in their interactions with humans. In this work, we argue that social robots should instruct humans following the principle of least- collaborative-effort. Like humans do when instructing each other, robots should minimise information efficiency over the benefits of collaborative interactive behaviour. In this paper, we first introduce the concept of least-collaborative-effort in human communication and then discuss implications for design of instructions in human- robot collaboration.

Multimodal Human-Robot Interaction

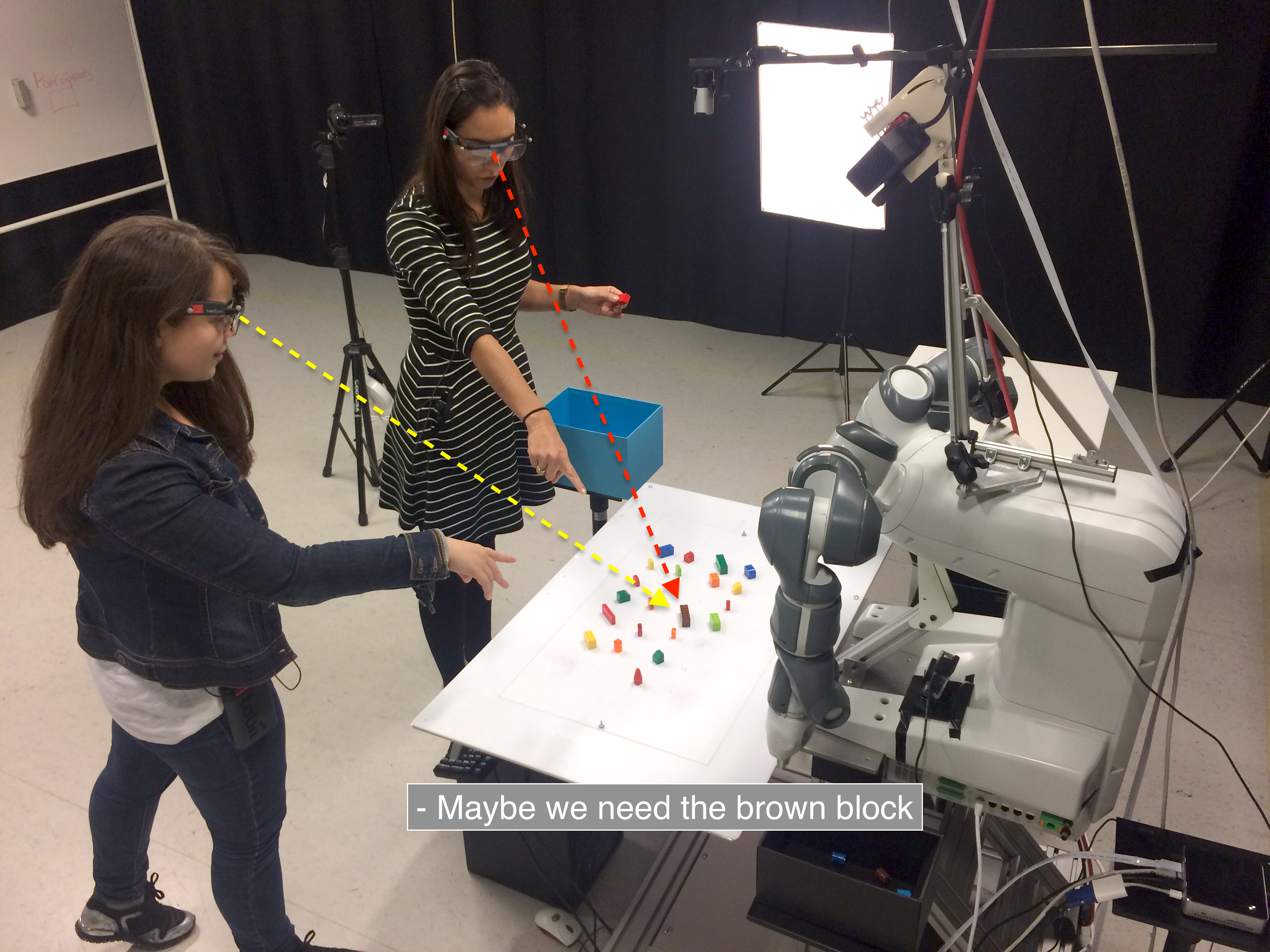

Multimodal Reference Resolution

Modelling visual attention and natural language references to objects. We developed a likelihood model of object saliency given the proportion of eye gaze to objects during referring expressions (top right corner).

The eye gaze in 3d space is automatically detected and annotated to the available targets in the room. The speech transcriptions and syntactic parsing of natural language are also displayed in real time (top left corner).

Humans use pragmatic feedback to disambiguate references to objects in the shared space of attention. How do robots disambiguate object references to resolve word-referent mapping? We use humans' eye-gaze direction and deictic expressions to obtain knowledge about the world surrounding the robot.

PROJECTS

FACT

The focus of FACT is on providing safe and flexible feedback in unforeseen situations, enhancement of human-robot cooperation and learning from experience. The project will develop a robot that intelligently assists workers during production and the interaction is based on visual feedback and natural communication.

BabyRobot

The crowning achievement of human communication is our unique ability to share intentionality, create and execute on joint plans. Using this paradigm we model human-robot communication as a three-step process: sharing attention, establishing common ground and forming shared goals. Prerequisites for successful communication are being able to decode the cognitive state of people around us (intention-reading) and to build trust.