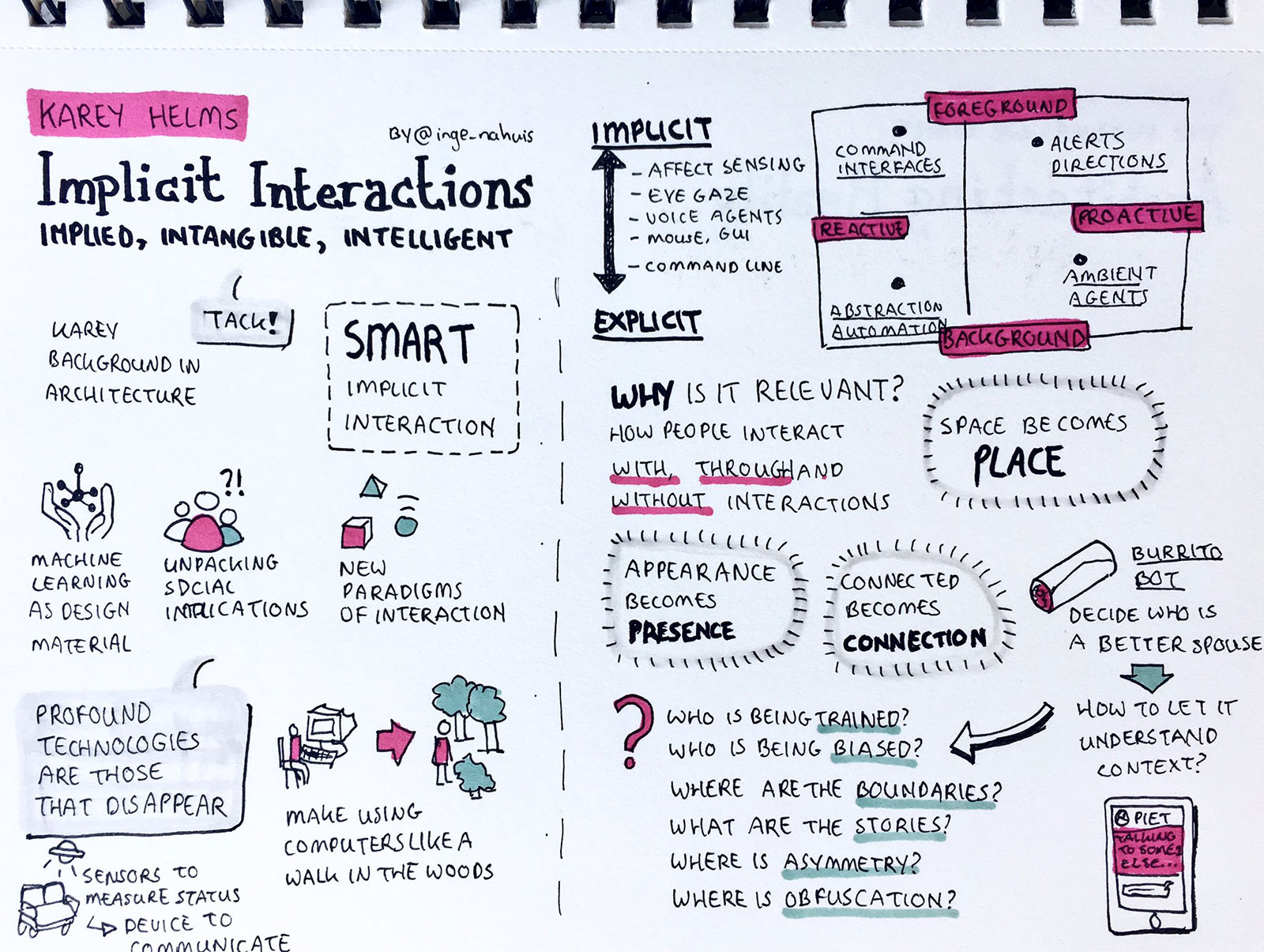

Smart Implicit Interaction

The “Internet of Things” (IoT) holds great potential for automating and hiding much of the tedium of our everyday lives. It is predicted to revolutionise logistics, transportation, connectivity and household electricity consumption, and even the way we manage our homes.

Yet these systems are beset with manifest human interaction problems. The fridge beeps if you leave the door open, the washing machine signals when it has finished, and some chainsaws now even warn you when you have been using them for too long. Each system has been designed around its own particular, limited interaction model: the smart lighting system in your apartment was not designed for the sharing economy, and the robotic lawn mower might wander off and leave your garden. Different parts of your entertainment system turn the volume up and down and fail to work together. Each 'smart' object comes with its own form of interaction, its own mobile app, its own upgrade requirements and its own way of calling for the user's attention. Interaction models have been inherited from the desktop metaphor, while mobile interaction relies on separate apps that each use their own non-standardised icons, sounds or notification frameworks. When put together, today's smart technologies do not blend; they cannot interface with one another, and, most importantly, we as end users have to learn how to interact with each of them, one by one.

In some ways, this resembles personal computing before the desktop metaphor, the Internet before the web, or mobile computing before touch interfaces. In short, IoT still lacks its killer interface paradigm.

This project is built around developing a new interface paradigm that we call smart implicit interaction. Implicit interactions stay in the background, drawing on analysis of speech, movement and other contextual data, and avoid unnecessarily disturbing us or grabbing our attention. When we turn to them, depending on context and functionality, they either shift into an explicit interaction, engaging us in a classical interaction dialogue that starts from an analysis of the context at hand, or they continue to engage us implicitly through entirely different modalities that do not require an explicit dialogue: the smart objects respond to the ways we move or to the other tasks we are engaged in.

One form of implicit interaction we have experimented with is letting mobile phones listen to the surrounding conversation and continuously adapt to what might be a relevant starting point once the user decides to turn to the phone. When the user activates the phone, we can imagine the search app already having search terms from the conversation filled in, or the map app showing places discussed in the conversation. Similarly, if the weather was mentioned and the phone's owner was in their garden, the gardening app might combine the weather information with readings from a humidity sensor in the garden to provide a relevant starting point. This is, of course, only possible by drawing on massive data sets and continuously adapting to what people say, their indoor and outdoor location, their movements and any smart objects in the environment, making use of the whole ecology of artefacts, people and their practices.

Funding

SSF

Project duration

2016 - 2021