Projects

Permanent Activity

MSc projects at KTH in collaboration with industry (since 2007)

|

Many MSc projects at KTH are conducted in collaboration with industry or other universities and research institutes. I have the pleasure of examining or supervising a number of such projects every year. Such external collaborations are always stimulating, and often lead to publications and further collaboration. Recent publications with external MSc students

|

Current Projects

Evaluation of generative models (WASP 2024-present)

|

This project is part of the WARA Media and Language and a collaboration with the company Electronic Arts. Generative models will revolutionize many industries and professions, with applications like programming assistants already in use. This raises a need for reliable and automated metrics that measure, for example, method robustness and appropriateness. Understanding quality is particularly crucial in domains less intuitive to the average user than images and text, which might require expert evaluation of each generated sample. Currently, only a few automated metrics exist, and their correlation with human judgment is debatable. Collaborators

Publications |

ANITA: ANImal TrAnslator (VR 2024-present)

|

|

In recent years there has been an explosive growth in neural network based algorithms for interpretation and generation of natural language. One task that has been addressed successfully using neural approaches is machine translation from one language to another.

The goal of the project is to build an automated interpreter that translates animal communicative behavior into human language, in order to give humans insight into the minds of animals in their care. In this project we focus on dogs, and will potentially explore common denominators with other species such as horses and cattle. Despite the high potential impact, automated animal behavior recognition is still an undeveloped field. The reason is not the signal itself; dogs have developed together with humans for thousands of years, and have rich communication and interaction both with humans and with other animals. The main difference from the human language field is instead the lack of data; large data volume is a key success factor in training large neural language models. Thus, data collection is an important part of this project. Using this data, we propose to develop a deep generative approach to animal behavior recognition from video. Collaborators

|

OrchestrAI: Deep generative models of the communication between conductor and orchestra (WASP, SeRC 2023-present)

|

In this project, which is part of the WARA Media and Language and a collaboration with the Max Planck Institute for Empirical Aesthetics, we build computer models of the processes by which humans communicate in music performance, and use these to 1) learn about the underlying processes, 2) build different kinds of interactive applications. We focus on the communication between a conductor and an orchestra, a process based on the non-verbal communication of cues and instructions via the conductor’s hand, arm and upper body motion, as well as facial expression and gaze pattern. In the first part of the project, a museum installation funded by SeRC is designed for the omni-theater Wisdome Stockholm at Tekniska Museet, in collaboration with Berwaldhallen/Swedish Radio Symphony Orchestra and IVAR Studios. Collaborators

Publications

|

The relation between motion and cognition in infants (SeRC 2023-present)

|

In this project, which is part of the SeRC Data Science MCP and a collaboration with the Department of Women’s and Children’s Health at Karolinska Institutet, we study the relation between motion patterns and cognition and brain function in infants. The current primary application is detection of motor conditions in neonates, but we will also study more general connections between motion and future development of cognition and language. Collaborators

Publications |

Generative AI for the creation of artificial spiderweb (WASP, DDLS 2023-present)

|

This project is a collaboration with a group in veterinary biochemistry at the Swedish University of Agricultural Sciences, whose goal is to create artificial spider web - a protein-based, highly durable and strong material. Our contribution to the project is to develop deep generative methods for predicting material properties from the protein composition of the raw material. Collaborators

Publications

|

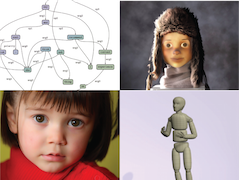

UNCOCO: UNCOnscious COmmunication (WASP 2023-present)

|

This project, which is part of the WARA Media and Language and a collaboration with the Perceptual Neuroscience group at KI, entails two contributions. Firstly, we develop a 3D embodied, integrated representation of head pose, gaze and facial micro expression that can be extracted from a regular 60 Hz video camera and a desk-mounted gaze sensor. This representation provides a preprocessing step for the second contribution, a deep generative model for inferring the latent emotional state of the human from the non-verbal communicative behavior. The model is employed in three different contexts: 1) estimating user affect for a digital avatar, 2) analyzing human non-verbal behavior connected to sensor stimuli, e.g., quantifying the approach/avoidance motor response to smell, 3) estimating frustration in a driving scenario. Collaborators

Publications |

STING: Synthesis and analysis with Transducers and Invertible Neural Generators (WASP 2022-present)

|

Human communication is multimodal in nature, and occurs through combinations of speech, The STING NEST, part of the WARA Media and Language, intends to change this state of affairs by uniting synthesis and analysis with transducers and invertible neural models. This involves connecting concrete, continuous-valued sensory data such as images, sound, and motion with high-level, predominantly discrete representations of meaning, which has the potential to endow synthesis output with human-understandable high-level explanations, while simultaneously improving the ability to attach probabilities to semantic representations. The bidirectionality also allows us to create efficient mechanisms for explainability, and to inspect and enforce fairness in the models. Collaborators

Publications

|

MARTHA: MARkerless 3D capTure for Horse motion Analysis (KTH, FORMAS 2020-present)

|

|

Lameness in horses is a sign of disease or injury, and is associated with pain for the animal. Changed behavior, body pose and motion pattern accompany lameness and many other diseases. Caretakers have difficulties in recognizing early signs of disease, since these signs are subtle and since efficient observation of animals takes resources and time. At the same time, it is crucial to detect these early signs, as the injury that causes them is easy to treat at this early stage, but will potentially be lethal if untreated. A system for automatic detection of lameness would therefore have a huge positive impact on horse welfare. The developed method will require data. Firstly, we will record 3D motion capture data and time-correlated video in controlled indoor settings. This data will be used to train and evaluate the developed 3D shape, pose and motion model. Secondly, we will record, as well as leverage previous recordings of, horses with induced lameness or with clinically obtained ground truth lameness diagnoses. This data will be used to train methods for recognition and localization of orthopaedic injuries, and for detection of pain-related behavior. Collaborators

Publications

|

Past Projects

HiSS: Humanizing the Sustainable Smart city (Digital Futures 2019-2024)

|

The overarching objective is to improve our understanding of how human social behavior shapes sustainable smart city design and development through multi-level interactions between humans and cyber agents. Our key hypothesis is that human-social wellbeing is the main driver of smart city development, strongly influencing human-social choices and behaviors. This hypothesis will be substantiated through mathematical and computational modelling, which spans and links multiple scales, and tested by means of several case studies set in the Stockholm region as part of the Digital Demo Stockholm initiative. Collaborators

Publications

|

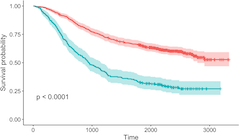

Variational Approximations and Inference for survival analysis and joint modeling (SeRC 2019-2022)

|

This project, which is part of the SeRC eCPC MCP, is a collaboration with the Biostatistics group at the Department of Medical Epidemiology and Biostatistics. Together we develop variational methods for more accurate prediction of survival probability. Collaborators

Publications

|

AIVIA: AI for Viable Cities (VINNOVA 2019-2020)

|

This is a subproject of the VINNOVA project Viable Cities, where we wrote a series of popular scientific essays on AI and cities of the future. Collaborators

|

EquineML: Machine Learning methods for recognition of the pain expressions of horses (VR, FORMAS 2017-2022)

|

Recognition of pain in horses and other animals is important, because pain is a manifestation of disease and decreases animal welfare. Pain diagnostics for humans typically includes self-evaluation and location of the pain with the help of standardized forms, and labeling of the pain by a clinical expert using pain scales. However, animals cannot verbalize their pain as humans can, and the use of standardized pain scales is challenged by the fact that animals such as horses and cattle, being prey animals, display subtle and less obvious pain behavior - it is simply beneficial for a prey animal to appear healthy, in order to lower the interest from predators. The aim of this project is to develop methods for automatic recognition of pain in horses, with the help of Computer Vision. Collaborators

Publications

|

Automatic visual understanding for visually impaired persons (Promobilia 2017-2022)

|

In this project, we aim to build an automatic image understanding system that can perform real-time robust image understanding to aid visually impaired persons. Collaborators

Publications

|

Causal Healthcare (SeRC, KTH 2016-2022)

|

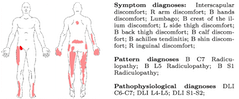

In this project, which is part of the SeRC Data Science MCP, we develop Machine Learning methods that discover causal structures from medical data, for automatic decision support to medical doctors in their work to diagnose different types of injuries and illnesses. The purpose is to determine the underlying causes of observed symptoms and measurements, making it possible to semi-automatically reason about the potential effects of different actions, and propose suitable treatment. Collaborators

Publications

|

Aerial Robotic Choir - expressive body language in different embodiments (KTH, 2016-2021)

|

During ancient times, the choir (χορος, khoros) had a major function in the classical Greek theatrical plays - commenting on and interacting with the main characters in the drama. We aim to create a robotic choir, invited to take part in a full-scale operatic performance in Rijeka, Croatia, September 2020 - thereby grounding our technological research in an ancient theatrical and operatic tradition. In our re-interpretation, the choir will consist of a swarm of small flying drones that have perceptual capabilities and thereby will be able to interact with human singers, reacting to their behavior both as individual agents, and as a swarm. Collaborators

Publications

|

EACare: Embodied Agent to support elderly mental wellbeing (SSF, 2016-2021)

|

The main goal of the multidisciplinary project EACare is to develop an embodied agent – a robot head with communicative skills – capable of interacting with people, especially the elderly, at a clinic or in their home. The agent analyzes their mental and psychological status via powerful audiovisual sensing and assesses their mental abilities to identify subjects at high risk or possibly at the first stages of cognitive decline, with a special focus on Alzheimer’s disease. The interaction is performed according to the procedures developed for memory evaluation sessions, the key part of the diagnostic process for detecting cognitive decline. Collaborators

Publications

|

Data-driven modelling of interaction skills for social robots (ICT-TNG 2016-2018)

|

This project aims to investigate fundamentals of situated and collaborative multi-party interaction and collect the data and knowledge required to build social robots that are able to handle collaborative attention and co-present interaction. In the project we will employ state-of-the-art motion and gaze tracking on a large scale as the basis for modelling and implementing critical non-verbal behaviours such as joint attention, mutual gaze and backchannels in situated human-robot collaborative interaction, in a fluent, adaptive and context-sensitive way. Collaborators

Publications

|

SocSMCs: Socialising SensoriMotor Contingencies (EU H2020 2015-2018)

|

As robots become more omnipresent in our society, we are facing the challenge of making them more socially competent. However, in order to safely and meaningfully cooperate with humans, robots must be able to interact in ways that humans find intuitive and understandable. Addressing this challenge, we propose a novel approach for understanding and modelling social behaviour and implementing social coupling in robots. Our approach presents a radical departure from the classical view of social cognition as mind-reading, mentalising or maintaining internal representations of other agents. This project is based on the view that even complex modes of social interaction are grounded in basic sensorimotor interaction patterns. SensoriMotor Contingencies (SMCs) are known to be highly relevant in cognition. Our key hypothesis is that learning and mastery of action-effect contingencies are also critical to enable effective coupling of agents in social contexts. We use "socSMCs" as a shorthand for such socially relevant action-effect contingencies. We will investigate socSMCs in human-human and human-robot social interaction scenarios. The main objectives of the project are to elaborate and investigate the concept of socSMCs in terms of information-theoretic and neurocomputational models, to deploy them in the control of humanoid robots for social entrainment with humans, to elucidate the mechanisms for sustaining and exercising socSMCs in the human brain, to study their breakdown in patients with autism spectrum disorders, and to benchmark the socSMCs approach in several demonstrator scenarios. Our long term vision is to realize a new socially competent robot technology grounded in novel insights into mechanisms of functional and dysfunctional social behavior, and to test novel aspects and strategies for human-robot interaction and cooperation that can be applied in a multitude of assistive roles relying on highly compact computational solutions.
My part of the project comprised human motion and activity forecasting. Collaborators

Publications

|

Analyzing the motion of musical conductors (KTH, 2014-2017)

|

Classical music sound production is structured by an underlying manuscript, the sheet music, that specifies in some detail what will happen in the music. However, the sheet music specifies only up to a certain degree how the music sounds when performed by an orchestra; there is room for considerable variation in terms of timbre, texture, balance between instrument groups, tempo, local accents, and dynamics. In larger ensembles, such as symphony orchestras, the interpretation of the sheet music is done by the conductor. We propose to learn a simplified generative model of the entire music production process from data: the conductor's articulated body motion in combination with the produced orchestra sound. This model can be exploited for two applications: the first is "home conducting" systems, i.e., conductor-sensitive music synthesizers; the second is tools for analyzing conductor-orchestra communication, where latent states in the conducting process are inferred from recordings of conducting motion and orchestral sound. Collaborators

Publications

|

FOVIAL: FOrensic VIdeo AnaLysis - finding out what really happened (VR, EU Marie Curie 2013-2016)

|

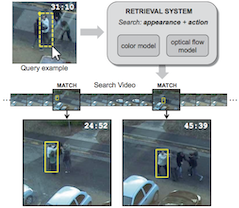

In parallel to the massive increase of text data available on the Internet, there has been a corresponding increase in the amount of available surveillance video. There are good and bad aspects of this. One undeniably positive aspect is that it is possible to gather evidence from surveillance video when investigating crimes or the causes of accidents, known as forensic video analysis. Forensic video investigations are now carried out manually. This involves a huge and very tedious effort; e.g., 60 000 hours of video in the Breivik investigation. The amount of surveillance data is also constantly growing. This means that in future investigations, it will no longer be possible to go through all the evidence manually. The solution is to automate parts of the process. In this project we propose to learn an event model from surveillance data that can be used to characterize all events in a new set of surveillance video recorded from a camera network. Our model will also represent the causal dependencies and correlations between events. Using this model, or explanation, of the data from the network, a semi-automated forensic video analysis tool with a human in the loop will be designed, where the user chooses a certain event, e.g., a certain individual getting off a train, and the system returns all earlier observations of this individual, or all other instances of people getting off trains, or all the events that may have caused or are correlated with the given "person getting off train" event. Collaborators

Publications

|

RoboHow (EU FP7 2013-2016)

|

Robohow aims at enabling robots to competently perform everyday human-scale manipulation activities - both in human working and living environments. In order to achieve this goal, Robohow pursues a knowledge-enabled and plan-based approach to robot programming and control. The vision of the project is that of a cognitive robot that autonomously performs complex everyday manipulation tasks and extends its repertoire of such by acquiring new skills using web-enabled and experience-based learning as well as by observing humans. My part of the project comprised object tracking and visuo-haptic object exploration. Collaborators

Publications

|

TOMSY: TOpology based Motion SYnthesis for dexterous manipulation (EU FP7 2011-2014)

|

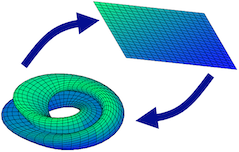

The aim of TOMSY is to enable a generational leap in the techniques and scalability of motion synthesis algorithms. We propose to do this by learning and exploiting appropriate topological representations and testing them on challenging domains of flexible, multi-object manipulation and close contact robot control and computer animation. Traditional motion planning algorithms have struggled to cope with both the dimensionality of the state and action space and generalisability of solutions in such domains. This proposal builds on existing geometric notions of topological metrics and uses data driven methods to discover multi-scale mappings that capture key invariances - blending between symbolic, discrete and continuous latent space representations. We will develop methods for sensing, planning and control using such representations. Collaborators

Publications

|

Gesture-based violin synthesis (KTH, 2011-2012)

|

There are many commercial applications of synthesized music from acoustic instruments, e.g. generation of orchestral sound from sheet music. Whereas the sound generation process of some types of instruments, like the piano, is fairly well known, the sound of a violin has proven extremely difficult to synthesize. The reason is that the underlying process is highly complex: the art of violin-playing involves extremely fast and precise motion with timing on the order of milliseconds. Collaborators

Publications

|

HumanAct: Visual and multi-modal learning of Human Activity and interaction with the surrounding scene (VR, EIT ICT Labs 2010-2013)

|

The overwhelming majority of human activities are interactive in the sense that they relate to the world around the human (in Computer Vision called the "scene"). Despite this, visual analyses of human activity very rarely take scene context into account. The objective in this project is modeling of human activity with object and scene context. The methods developed within the project will be applied to the task of Learning from Demonstration, where a (household) robot learns how to perform a task (e.g. preparing a dish) by watching a human perform the same task. Collaborators

Publications

|

PACO-PLUS: Perception, Action and COgnition through learning of object-action complexes (EU FP6 2007-2010)

|

The EU project PACO-PLUS brings together an interdisciplinary research team to design and build cognitive robots capable of developing perceptual, behavioural and cognitive categories that can be used, communicated and shared with other humans and artificial agents. In my part of the project, we are interested in programming by demonstration applications, in which a robot learns how to perform a task by watching a human do the same task. This involves learning about the scene, objects in the scene, and actions performed on those objects. It also involves learning grammatical structures of actions and objects involved in a task. Collaborators

Publications

|