This blog highlights contributions by a group of faculty, researchers, and doctoral students working on various aspects of Networked Systems. For open positions, please consult our Projects.

We are very happy to announce that Daniel Felipe Perez Ramirez successfully defended his PhD thesis on February 12, 2026! Prof. Magnus Boman (KI) and Nicolas Tsiftes (RISE) have done a great job as the academic co-advisor and industrial advisor, respectively. We are very grateful to Prof. Thiemo Voigt for creating a wonderful research environment for Daniel to thrive at RISE. Prof. Rui Tan was the opponent at the defense, while Assoc. Prof. Amir Payberah served as the Chair. Daniel’s thesis is available online:

This thesis explores the applicability of machine learning to solve resource allocation optimization problems. It demonstrates that GNN-based models with a Transformer-like architecture, trained on small 10-node networks, can robustly generalize to large-scale IoT deployments with up to 1000 nodes (i.e., 100× larger than those encountered during training), achieving up to 2× resource savings in energy and spectrum utilization while maintaining consistent performance across diverse network topologies.

A few images from the defense and the celebration are below.

Dejan congratulates Daniel, photo credit Amir Payberah.

Amir congratulates Daniel.

Magnus congratulates Daniel.

Our upcoming ICLR paper: “KVComm: Enabling Efficient LLM Communication through Selective KV Sharing”

We are very happy to announce that our paper will appear in the Proceedings of the Fourteenth International Conference on Learning Representations (ICLR). The paper title is “KVComm: Enabling Efficient LLM Communication through Selective KV Sharing”, and this is joint work with Xiangyu Shi, Marco Chiesa, Gerald Q. Maguire Jr., and Dejan Kostic (all from KTH). We have already released the source code.

The full abstract is below:

Large Language Models (LLMs) are increasingly deployed in multi-agent systems, where effective inter-model communication is crucial. Existing communication protocols either rely on natural language, incurring high inference costs and information loss, or on hidden states, which suffer from information concentration bias and inefficiency. To address these limitations, we propose KVComm, a novel communication framework that enables efficient communication between LLMs through selective sharing of KV pairs. KVComm leverages the rich information encoded in the KV pairs while avoiding the pitfalls of hidden states. We introduce a KV layer-wise selection strategy based on attention importance scores with a Gaussian prior to identify the most informative KV pairs for communication. Extensive experiments across diverse tasks and model pairs demonstrate that KVComm achieves comparable performance to the upper-bound method, which directly merges inputs to one model without any communication, while transmitting as few as 30% of layers’ KV pairs. Our study highlights the potential of KV pairs as an effective medium for inter-LLM communication, paving the way for scalable and efficient multi-agent systems.
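To give a rough feel for what layer-wise selection could look like, here is a minimal sketch. It is not the code from the paper or the released repository: the function names, the blending weight `alpha`, the default prior center and width, and the way the two terms are combined are all assumptions made for illustration. It only shows the general idea of ranking layers by an importance score blended with a Gaussian prior over the layer index and keeping a fraction of them.

```python
import math

def gaussian_prior(num_layers, mu, sigma):
    """Unnormalized Gaussian weight for each layer index (hypothetical prior shape)."""
    return [math.exp(-((i - mu) ** 2) / (2 * sigma ** 2)) for i in range(num_layers)]

def normalize(values):
    """Scale values to [0, 1]; a constant input maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

def select_layers(importance, ratio=0.3, alpha=0.5, mu=None, sigma=None):
    """Return the sorted indices of the layers whose KV pairs would be shared.

    importance: one attention-importance score per layer (assumed to be given).
    ratio:      fraction of layers to keep, e.g. 0.3 for 30%.
    alpha:      assumed weight between the importance score and the prior.
    """
    n = len(importance)
    mu = (n - 1) / 2 if mu is None else mu    # assumption: prior centered mid-stack
    sigma = n / 4 if sigma is None else sigma  # assumption: fairly wide prior
    prior = gaussian_prior(n, mu, sigma)
    imp = normalize(importance)
    scores = [alpha * a + (1 - alpha) * p for a, p in zip(imp, prior)]
    k = max(1, round(ratio * n))
    top = sorted(range(n), key=lambda i: scores[i], reverse=True)[:k]
    return sorted(top)

# Made-up importance scores for a 10-layer model.
example = [0.1, 0.2, 0.9, 0.4, 0.8, 0.7, 0.3, 0.2, 0.1, 0.05]
print(select_layers(example, ratio=0.3))
```

With `alpha=1.0` the prior is ignored and the selection follows the importance scores alone; with `alpha=0.0` the selection depends only on the position of each layer in the stack.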

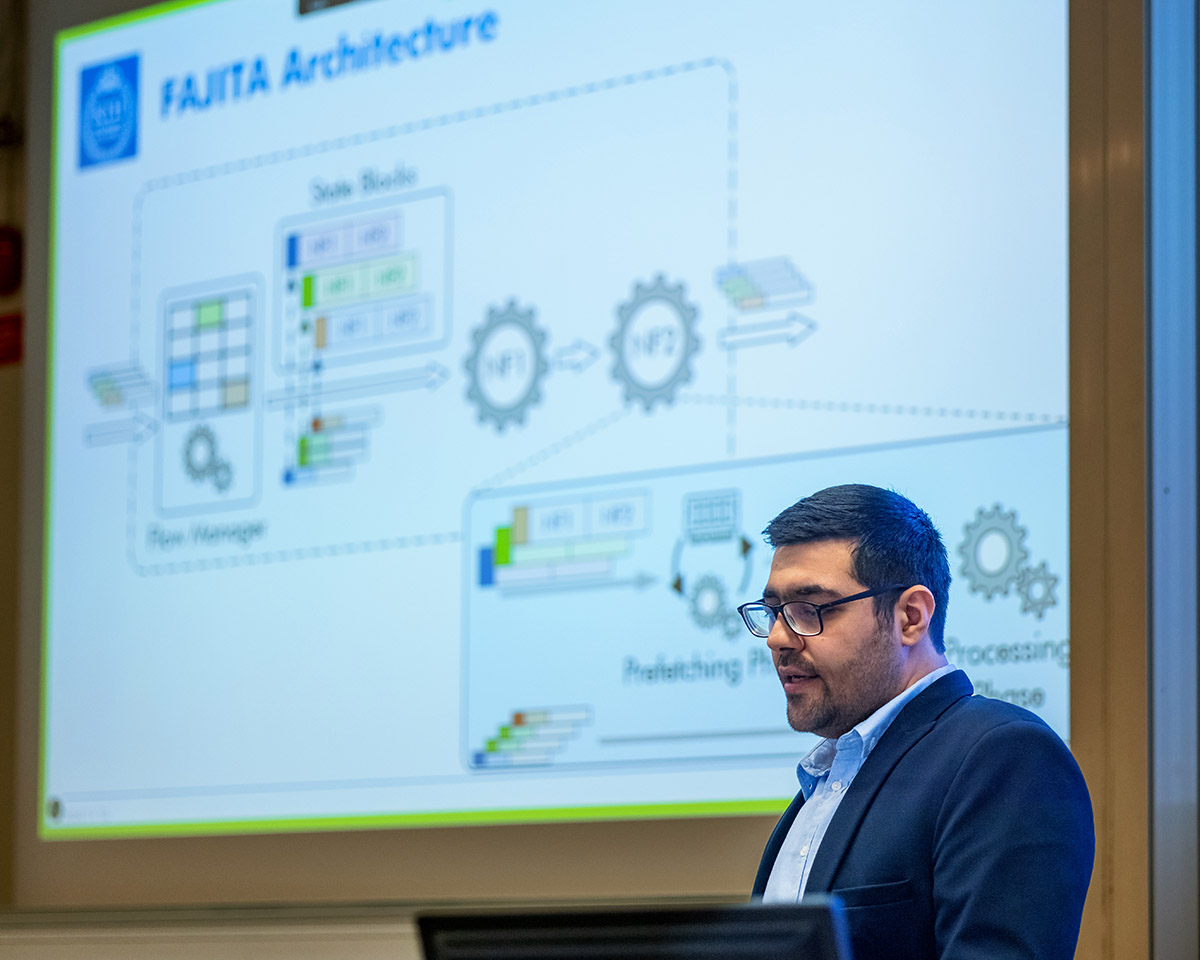

At CoNEXT ’24, Mariano presented our FAJITA paper. This work shows that a commodity server running a chain of stateful network functions can process more than 170 M packets per second (the equivalent of 1.4 Tbps if payloads are stored in a disaggregated fashion, as in our earlier Ribosome work [NSDI ’23])! Another interesting and perhaps unexpected finding is that, unless the number of so-called “elephant flows” is very small, spreading incoming packets among the cores using plain Receive Side Scaling (RSS) outperforms existing approaches that perform fine-grained flow accounting and load balancing. This happens because any possible gains are dwarfed by the slowdowns in accessing memory.
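For readers unfamiliar with RSS, the following is a simplified sketch of the idea: the flow identifier of each packet is hashed, and the hash picks the core. Real NICs typically use a Toeplitz hash with an indirection table; here CRC32 is used as a stand-in, and the function names and the synthetic workload are made up for illustration. This is not FAJITA code.

```python
import zlib
from collections import Counter

def rss_core(flow, num_cores):
    """Map a flow 5-tuple to a core index (CRC32 stands in for the NIC hash)."""
    key = "|".join(str(field) for field in flow).encode()
    return zlib.crc32(key) % num_cores

def spread(packets, num_cores):
    """Count how many packets land on each core."""
    load = Counter({core: 0 for core in range(num_cores)})
    for flow in packets:
        load[rss_core(flow, num_cores)] += 1
    return load

# Synthetic workload (assumption): 10,000 equally sized flows, 10 packets each.
flows = [
    ("10.0.%d.%d" % (i // 256, i % 256), "192.168.0.1", 1024 + i % 5000, 443, "tcp")
    for i in range(10000)
]
packets = [flow for flow in flows for _ in range(10)]

load = spread(packets, num_cores=16)
print("busiest core:", max(load.values()), "packets")
print("idlest core: ", min(load.values()), "packets")
```

Because the core is chosen from the flow identifier alone, all packets of a flow reach the same core without any per-flow bookkeeping, which is what keeps this approach cheap in terms of memory accesses. The flip side is that a handful of very heavy flows can overload whichever cores they hash to.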

This is joint work with Hamid Ghasemirahni, Alireza Farshin (now at Nvidia), Mariano Scazzariello (now at RISE), Gerald Q. Maguire Jr., Dejan Kostić, and Marco Chiesa.

Our recording of Mariano’s talk is below:

Data centers increasingly utilize commodity servers to deploy low-latency Network Functions (NFs). However, the emergence of multi-hundred-gigabit-per-second network interface cards (NICs) has drastically increased the performance expected from commodity servers. Additionally, recently introduced systems that store packet payloads in temporary off-CPU locations (e.g., programmable switches, NICs, and RDMA servers) further increase the load on NF servers, making packet processing even more challenging.

This paper demonstrates existing bottlenecks and challenges of state-of-the-art stateful packet processing frameworks and proposes a system, called FAJITA, to tackle these challenges & accelerate stateful packet processing on commodity hardware. FAJITA proposes an optimized processing pipeline for stateful network functions to minimize memory accesses and overcome the overheads of accessing shared data structures while ensuring efficient batch processing at every stage of the pipeline. Furthermore, FAJITA provides a performant architecture to deploy high-performance network functions service chains containing stateful elements with different state granularities. FAJITA improves the throughput and latency of high-speed stateful network functions by ~2.43x compared to the most performant state-of-the-art solutions, enabling commodity hardware to process up to ~178 Million 64-B packets per second (pps) using 16 cores.
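As a back-of-the-envelope check of the figures quoted in this post, the short sketch below works out what they imply. The helper names are ours, and two assumptions are baked in: that "64-B" refers to the Ethernet frame size, and that each frame carries the usual 20 bytes of preamble and inter-packet gap on the wire.

```python
def implied_packet_bytes(bits_per_second, packets_per_second):
    """Average packet size implied by a bit rate and a packet rate."""
    return bits_per_second / 8 / packets_per_second

def wire_gbps(pps, frame_bytes, overhead_bytes=20):
    """Line rate in Gbps; overhead_bytes covers preamble plus inter-packet gap."""
    return pps * (frame_bytes + overhead_bytes) * 8 / 1e9

def per_core_budget_ns(pps, cores):
    """Average time available per packet on each core, in nanoseconds."""
    return 1e9 / (pps / cores)

# 170 Mpps being equivalent to 1.4 Tbps implies packets of roughly 1 KB.
print(round(implied_packet_bytes(1.4e12, 170e6)))   # 1029

# 178 Mpps of 64-B frames, with the assumed 20 B of per-frame wire overhead.
print(round(wire_gbps(178e6, 64), 1))               # 119.6

# Spread over 16 cores, each core has about 90 ns per packet.
print(round(per_core_budget_ns(178e6, 16), 1))      # 89.9
```

A budget of about 90 ns per packet leaves room for only a few accesses to main memory, which is in line with the emphasis the abstract places on minimizing memory accesses.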

We are very happy to announce that Hamid Ghasemirahni successfully defended his PhD thesis (second and final one in the ERC ULTRA project) on November 18, 2024! Marco Chiesa has done a superb job as a co-advisor, and we are very grateful to Prof. Gerald Q. Maguire Jr. for his stellar insights (as usual). Gábor Rétvári was the opponent at the defense, while Paris Carbone served as the Chair. Hamid’s thesis is available online:

In short, this thesis contains the work on Reframer, which shows the surprising result that deliberately delaying packets can improve the performance of backend servers (e.g., those used for Network Function Virtualization) by up to about a factor of 2. It also includes FAJITA, which shows that a commodity server running a chain of stateful network functions can process more than 170 M packets per second (the equivalent of 1.4 Tbps if payloads are stored in a disaggregated fashion, as in our earlier Ribosome work [NSDI ’23])!

A few images from the defense and the celebration are below.

Hamid presenting during the defense (image taken by Dejan Kostic).

Paris congratulates Hamid on the successfully defended PhD thesis (image taken by Dejan Kostic).

Dejan hands the traditional gift to Hamid (image taken by Voravit Tanyingyong).

Group image with colleagues and Dejan (image taken by Voravit Tanyingyong).

Group image (image taken by Voravit Tanyingyong).

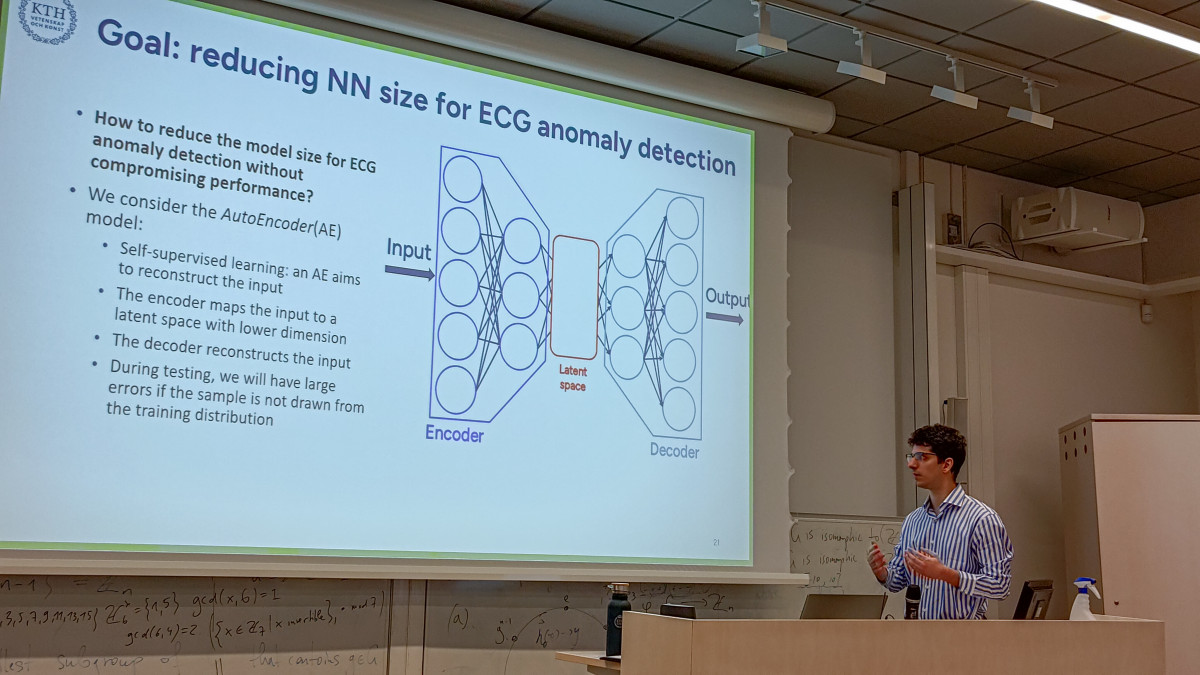

We are happy to announce that Giacomo Verardo successfully defended his licentiate thesis (the licentiate is a degree at KTH half-way to a PhD)! As usual, Marco Chiesa has done an excellent job as a co-advisor, and we are truly grateful to Prof. Gerald Q. Maguire Jr. for his stellar insights. Dr. Maxime Sermesant was a superb opponent at the licentiate seminar, with Prof. Vlad Vlassov as the examiner. Giacomo’s thesis is available online:

“Optimizing Neural Network Models for Healthcare and Federated Learning”

Giacomo presenting during the defense (image taken by Massimo Girondi).

Dejan congratulates Giacomo once Prof. Vlassov announced that Giacomo passed his defense (image taken by Massimo Girondi).

Dejan hands the traditional gift to Giacomo (image taken by Voravit Tanyingyong).

Group image with Networked Systems Laboratory members (image taken by Sanna Jarl).