High-performance Computing

The High-Performance Computing (HPC) group at EECS KTH conducts research in parallel programming models, parallel and distributed algorithms, large-scale HPC applications, performance monitoring, modeling, analysis, and optimization, as well as quantum computing (QC). HPC and supercomputers are integral to advancing scientific research, engineering, and technological innovation across diverse fields. Supercomputers enable researchers to tackle once-insurmountable challenges, including simulations of Earth's magnetosphere, weather forecasting, climate modeling, advanced material design, and drug discovery. The immense computational power of supercomputers stems from massive parallelism across compute nodes interconnected by high-speed networks. However, exploiting this power at scale raises challenges in programming, communication, data movement, and I/O, which the HPC group aims to address in the Exascale and post-Exascale era.

Parallel Computing

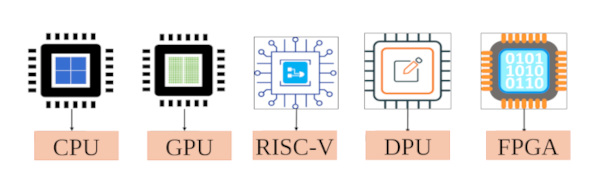

Our research leverages massively parallel computing units, such as GPUs, RISC-V vector units, multi-core CPUs, and SIMD units, to accelerate compute-intensive applications. On heterogeneous systems, we explore power-efficient computing units and offload each computation onto the most suitable unit to reduce resource usage and improve energy efficiency. By co-designing hardware and software techniques, we develop scalable solutions that address growing demand in diverse applications, ranging from drug discovery and quantum computing to machine learning.

Research in high-performance and parallel computing is carried out mainly within two groups led by two principal investigators:

Courses

- Applied GPU Programming (DD2360)
- Computational Methods for Electromagnetics (DD2370)
- Introduction to High Performance Computing (DD2358)
- Methods in High Performance Computing (DD2356)
- Quantum Computing for Computer Scientists (DD2367)
- Applied Quantum Machine Learning (FDD3268)
- LLMs for Computer Scientists (FDD3559)
- Parallel Computing: Theory – Hardware – Software (FDD3003)

Research Highlights

- Finalist for the ACM Gordon Bell Prize. 2023.
- Best Poster Award runner-up at the International Conference on Supercomputing. 2023.
- Best Paper Award at the International Symposium on Memory Systems. 2019.
- Best Paper Award at the International Workshop on Accelerators and Hybrid Emerging Systems. 2018.
- Best Paper Award at the International Conference on Parallel Processing. 2017.
- Best Paper Award at the EuroMPI conference. 2017.

Projects

VR: System-informed Disaggregated Memory (2023-2027)

Role: PI

The project aims to enable efficient execution of industrial and scientific workloads on future computing infrastructures with disaggregated memory. The main approach combines workload characteristics with runtime system state to optimize data placement and movement. The project is organized into four research tasks: composable memory requirements, transient data caching services, along-the-path data reduction, and global coordination.

EU Horizon: OpenCUBE (2023-2026)

Role: Coordinator

OpenCUBE's mission is to deliver the first deployment-ready cloud platform built on processors made in Europe. In synergy with the European Processor Initiative (EPI), which designs and develops European chips, the OpenCUBE project aims to develop a full software stack that promotes performance, energy efficiency, and programming efficacy in heterogeneous data centers. Together with pilot use cases, OpenCUBE seeks to streamline and encourage user adoption of the forthcoming European cloud platform.

Vinnova: QC-CC Pilot (2024-2025)

Role: KTH PI

This project seeks to establish a systematic pathway for optimizing applications so that they can efficiently leverage large-scale hybrid quantum-classical (QC-CC) computing systems. We will develop a hierarchical machine model that captures the distinctive features of the components in an integrated QC-CC system and of their interconnects at different granularities. Interconnects with different jitter and synchronization characteristics will be modeled.

EU Horizon: HIGHER (2025-2027)

Role: KTH PI

The HIGHER project will develop the first European energy-efficient, OCP-compliant modules for cloud and edge infrastructure, powering a real pre-production architecture built, among other things, on the outcomes of the EPI and EUPilot projects.

Plasma-PEPSC: Plasma Exascale-Performance Simulations CoE – Pushing flagship plasma simulation codes to tackle Exascale-enabled Grand Challenges via performance optimisation and codesign

Funded by EU Horizon EuroHPC, 2023-2027. Coordinator. Plasma-PEPSC aims to enable four lighthouse plasma simulation codes (BIT, GENE, PIConGPU, and Vlasiator), which address plasma-material interfaces, fusion, accelerator physics, and space physics, all important scientific drivers, to exploit Exascale supercomputers. Plasma-PEPSC co-designs the four plasma simulation codes with the systems developed within EPI, both by providing feedback on the design of European processors, accelerators, and pilot systems and by investigating the code changes needed to exploit these systems with maximum efficiency.

SeRC High-performance Computing Applications and Programming Models

Funded by Swedish e-Science Research Centre SESSI, 2016-2024. KTH Co-PI.

DEEP-SEA: DEEP – Software for Exascale Architectures

Funded by EU Horizon EuroHPC, 2021-2024. KTH PI. DEEP-SEA aims to deliver the programming environment for future European exascale systems, adapting all levels of the software (SW) stack – including low-level drivers, computation and communication libraries, resource management, and programming abstractions with associated runtime systems and tools – to support highly heterogeneous compute and memory configurations and to allow code optimisation across existing and future architectures and systems.

ADMIRE: Adaptive multi-tier intelligent data manager for Exascale

Funded by EU Horizon EuroHPC, 2021-2024. KTH PI. ADMIRE aims to create an active I/O stack that dynamically adjusts computation and storage requirements through intelligent global coordination, malleability of computation and I/O, and the scheduling of storage resources along all levels of the storage hierarchy. It will develop a software-defined framework based on the principles of scalable monitoring and control, separated control and data paths, and the orchestration of key system components and applications through embedded control points.

IO-SEA: IO Software for Exascale Architecture

Funded by EU Horizon EuroHPC, 2021-2024. KTH PI. IO-SEA aims to provide a novel data management and storage platform for exascale computing based on hierarchical storage management (HSM) and on-demand provisioning of storage services. The platform will make efficient use of storage tiers ranging from NVMe and NVRAM at the top all the way down to tape-based technologies. System requirements are driven by data-intensive use cases, following a strict co-design approach.

EPiGRAM-HS: Exascale Programming Models for Heterogeneous Systems

Funded by the European Commission, 2018-2021. Coordinator. EPiGRAM-HS aims to develop a new, validated programming environment for large-scale heterogeneous computing systems, including accelerators, reconfigurable hardware, and low-power microprocessors together with non-volatile and high-bandwidth memories, enabling applications to run on such systems at maximum performance.

VR: Dancing electrons at a singing comet

Funded by the Swedish Research Council, 2018-2021. Principal Investigator.

ESA: CosmoWeather

Funded by the European Space Agency, 2020-2021. Principal Investigator.

EPiGRAM

Funded by the European Commission, 2013-2016. Coordinator. EPiGRAM aims to investigate Exascale programming models for HPC and is a partner of the FP7 Exascale flagship project CRESTA. The EPiGRAM project focuses on application scalability as well as performance analysis directly relevant to HPC applications running on Exascale machines.