PROGEST - Production of prosodic prominence: integrating bodily and articulatory gestures

The aim of this project is to increase our understanding of how bodily gestures such as hand strokes, head nods and eyebrow movements interact with articulatory movements of the tongue and jaw and the acoustic speech signal to convey prominence. The project is a collaboration between KTH, Linnaeus University and Lund University.

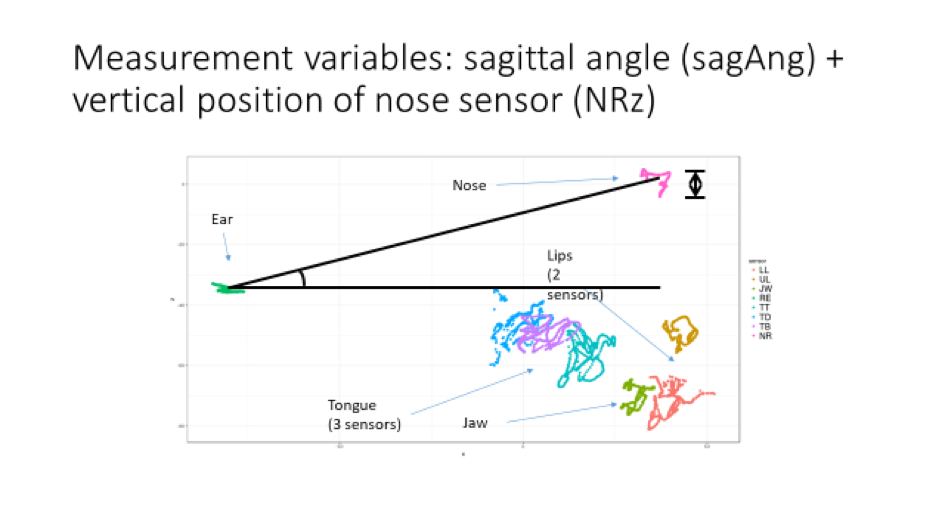

An essential function of prosody in spoken interaction is to highlight important words and phrases and make them more prominent. In recent years, we have begun to understand prosodic prominence as a multimodal phenomenon, composed of verbal and visual prominence markers. Verbal prominence markers are typically identified as sentence-level pitch accents. Bodily gestures such as hand strokes, head nods and eyebrow movements are often aligned with a pitch accent and function as visual markers of prominence. The prominent word itself is often produced with stronger articulatory gestures, resulting in both acoustic prominence (louder and longer) and visual prominence (greater movements of the lips and jaw). This project aims to investigate whether and how these different types of prominence markers interact and possibly influence each other. Three types of speech data are being analysed: read television news, unrestricted spontaneous speech, and controlled experimental recordings in which motion capture and electromagnetic articulography are used to record movements of the hands, head, eyebrows and articulators (lips, tongue, jaw). The results will be formulated as a model of multimodal speech production, which will have implications for our general understanding of how speech and gestures are planned and coordinated by the speaker. The model will also enable better modelling of speech and gestures in speech applications such as robots and avatars.

Staff:

Gilbert Ambrazaitis (Linnaeus University)

Johan Frid (Lund University)

Funding: VR (Swedish Research Council) 2017-02140

Duration: 2018-01-01 - 2021-12-31