SIGNBOT - Generative AI for Sign Language

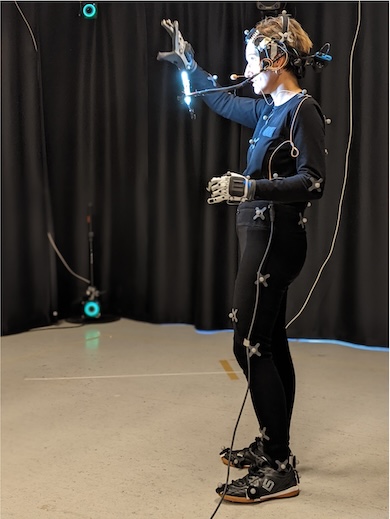

The processing of spoken languages has advanced rapidly in recent years, but sign languages, used by more than 70 million people worldwide, have not seen comparable progress. In this project, we aim to perform high-quality generative modelling of sign language using state-of-the-art generative AI models for animation and language. Our unique starting point for this mission is our experience in developing state-of-the-art neural motion synthesis techniques and neural end-to-end speech generation systems.

The project is highly interdisciplinary, as reflected in the composition of the research team from KTH and Stockholm University, which collectively encompasses expertise in multimodal speech and language processing, avatars and embodied agents, neural motion generation, and motion capture, as well as sign language linguistics, corpus collection, and annotation.

Sign languages present unique challenges not found in written or spoken language, due to their visuo-spatial nature and highly parallel structure. This makes sign language a suitable problem to approach with generative AI methods, and suggests that a combination of approaches from the text, speech, motion, and image domains might be required. The societal significance of this problem cannot be overstated, as solutions could greatly increase accessibility and inclusion for sign language users, providing them with resources and communication modes that the rest of society takes for granted.

Publications

Funding

WASP/WARA ML and Vetenskapsrådet (grant no. 2023-04548)

Duration

2024-01-01 → 2027-12-31