A Discrete Choice Model - Analyzing non-linear contributions to predictive performance

A machine learning approach that investigates how the ability of neural networks to learn non-linear phenomena efficiently can improve predictive performance in a four-step model.

This paper aims to build and investigate a discrete choice model of activity-based travel demand using artificial neural networks with non-linear activation functions. This approach can be seen as an extension in complexity of the conventional multinomial logit (MNL) model. By adding intermediate (hidden) layers and many more parameters, the network lets the input variables interact with each other in a large number of combinations, so that it can learn the non-linear phenomena present in the data more efficiently.
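To make the contrast with the MNL concrete, the following minimal sketch (Python standard library only) compares the two utility specifications. It assumes alternative-specific linear utilities for the MNL and a single hidden layer with a ReLU activation for the network; the feature values, layer sizes, and randomly drawn weights are hypothetical and untrained, and the sketch is not the architecture used in the paper.

```python
import math
import random

def softmax(utilities):
    """Turn utilities into choice probabilities (shifted by the max for numerical stability)."""
    m = max(utilities)
    exps = [math.exp(u - m) for u in utilities]
    total = sum(exps)
    return [e / total for e in exps]

def mnl_utilities(x, beta):
    """Conventional MNL: each alternative's utility is linear in the input variables.
    beta[j] holds the (hypothetical) coefficients for alternative j."""
    return [sum(b * xi for b, xi in zip(beta_j, x)) for beta_j in beta]

def relu(z):
    return max(0.0, z)

def mlp_utilities(x, w_hidden, b_hidden, w_out, b_out):
    """One hidden layer with a non-linear (ReLU) activation. Each hidden unit
    combines all input variables, so the utilities can reflect interactions
    that a linear-in-parameters MNL cannot represent without hand-made terms."""
    hidden = [relu(sum(w * xi for w, xi in zip(w_h, x)) + b_h)
              for w_h, b_h in zip(w_hidden, b_hidden)]
    return [sum(w * h for w, h in zip(w_o, hidden)) + b_o
            for w_o, b_o in zip(w_out, b_out)]

# Hypothetical example: 2 input variables, 3 alternatives, 4 hidden units.
random.seed(0)
n_in, n_hidden, n_alt = 2, 4, 3
x = [0.8, -1.2]  # illustrative standardized inputs, e.g. travel time and cost

# Untrained, randomly drawn weights (illustration only).
beta = [[random.gauss(0, 1) for _ in range(n_in)] for _ in range(n_alt)]
w_hidden = [[random.gauss(0, 1) for _ in range(n_in)] for _ in range(n_hidden)]
b_hidden = [0.0] * n_hidden
w_out = [[random.gauss(0, 1) for _ in range(n_hidden)] for _ in range(n_alt)]
b_out = [0.0] * n_alt

p_mnl = softmax(mnl_utilities(x, beta))
p_mlp = softmax(mlp_utilities(x, w_hidden, b_hidden, w_out, b_out))
print("MNL probabilities:", [round(p, 3) for p in p_mnl])
print("MLP probabilities:", [round(p, 3) for p in p_mlp])
```

Removing the hidden layer reduces the network to a linear layer followed by a softmax, which is the MNL form with alternative-specific coefficients. The hidden layer (here 27 parameters instead of 6) is what gives the model room to capture interactions between variables.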

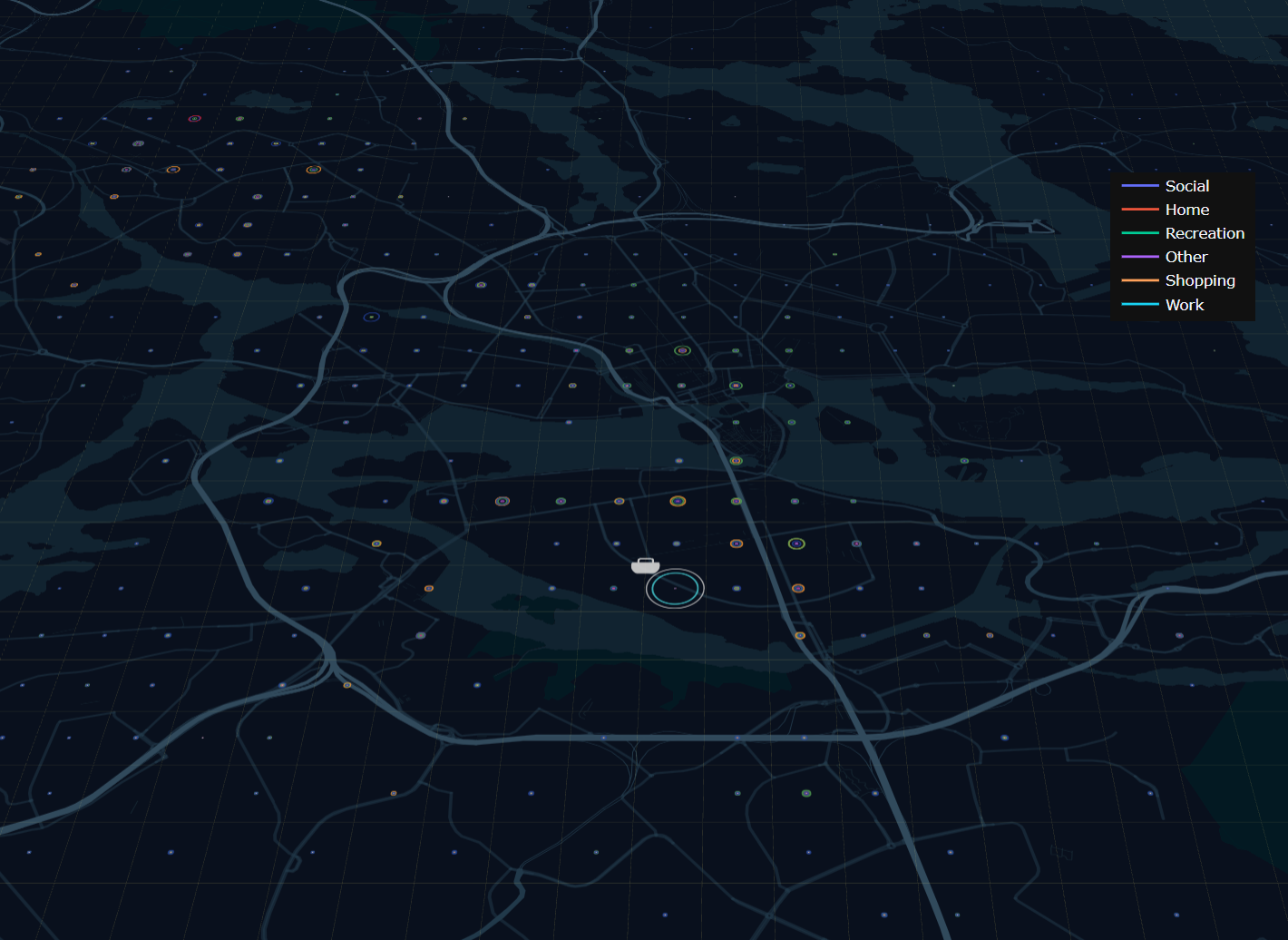

In a four-step model framework, the final model combines three sub-models: generation, distribution, and mode choice. At each of the 108 10-minute intervals between 05:00 and 23:00, the generation model determines whether a simulated agent stays at its selected activity or leaves it on a trip in the next time step. The distribution and mode choice models are invoked whenever a trip is made. Tests on hold-out data show that successive non-linear additions to the distribution model increase performance, and that the proposed model structure can simulate travel demand at a very detailed level.
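The simulation loop described above can be sketched in Python as follows. Only the time grid (108 steps of 10 minutes from 05:00 to 23:00) and the rule that distribution and mode choice run only when the generation model triggers a trip come from the text. The functions `generation_model`, `distribution_model`, and `mode_choice_model`, the `ZONES` and `MODES` lists, and the fixed 5% trip probability are hypothetical random stand-ins, not the trained networks.

```python
import random
from datetime import datetime, timedelta

# Simulation horizon from the text: 10-minute steps between 05:00 and 23:00.
START = datetime(2000, 1, 1, 5, 0)   # the date itself is arbitrary
END = datetime(2000, 1, 1, 23, 0)
STEP = timedelta(minutes=10)
N_STEPS = int((END - START) / STEP)  # 18 h x 6 steps per hour = 108

ZONES = ["zone_A", "zone_B", "zone_C"]        # hypothetical destination set
MODES = ["car", "public_transport", "bike"]   # hypothetical mode set

def generation_model(agent, t, rng):
    """Hypothetical stand-in: True if the agent leaves its current activity
    in the next time step, False if it stays."""
    return rng.random() < 0.05

def distribution_model(agent, t, rng):
    """Hypothetical stand-in for the destination choice model."""
    return rng.choice([z for z in ZONES if z != agent["location"]])

def mode_choice_model(agent, t, destination, rng):
    """Hypothetical stand-in for the mode choice model."""
    return rng.choice(MODES)

def simulate_agent(agent, rng):
    """Step through the day; distribution and mode choice run only when a trip occurs."""
    trips = []
    t = START
    for _ in range(N_STEPS):
        if generation_model(agent, t, rng):
            destination = distribution_model(agent, t, rng)
            mode = mode_choice_model(agent, t, destination, rng)
            trips.append((t.strftime("%H:%M"), agent["location"], destination, mode))
            agent["location"] = destination
        t += STEP
    return trips

rng = random.Random(42)
agent = {"location": "zone_A"}
for trip in simulate_agent(agent, rng):
    print(trip)
```

Replacing each stand-in with a trained model that returns choice probabilities, and sampling from those probabilities, would keep the same control flow.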